What is the history of Computer Science?

The history of computer science began long before the modern discipline of computer science emerged, usually appearing in forms such as mathematics or physics. Developments in earlier centuries foreshadowed the discipline that we now know as computer science.

What was the first computer ever made by a university?

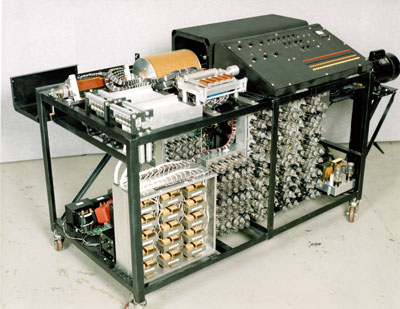

In 1952, ILLIAC, the first computer built and owned entirely by an educational institution, became operational. It was ten feet long, two feet wide, and eight and one-half feet high, contained 2,800 vacuum tubes, and weighed five tons.

When was the mathematics and computer science department established?

An undergraduate degree program in mathematics and computer science was established in the department, within the College of Liberal Arts and Sciences. In 1966, a graduate degree program in computer science was established in the Graduate College.

When did Computer Studies become compulsory in school?

In 1981, the BBC produced a microcomputer and classroom network, and Computer Studies became common for GCE O level students (ages 11–16), while Computer Science was taught to A level students. Its importance was recognised, and it became a compulsory part of the National Curriculum for Key Stages 3 and 4.

When was the first computer science course?

In 1947, IBM began teaching the Watson Laboratory Three-Week Course on Computing, which is seen as the first hands-on computer course. It reached more than 1600 people. The course was dispersed to IBM education centers worldwide in 1957.

Who invented computer science subject?

Charles Babbage and Ada Lovelace. Charles Babbage is often regarded as one of the first pioneers of computing. Beginning in the 1810s, Babbage envisioned computing numbers and tables mechanically. To put this into practice, he designed a calculator to compute numbers up to 8 decimal places long.

What year is computer science?

Some universities offer a three-year undergraduate program in Computer Science. As a field of study, computer science is concerned with computing, programming languages, databases, networking, software engineering, and artificial intelligence.

What is computer science course?

Computer science, often referred to as CS, is a broad field encompassing the study of computer systems, computational thinking and theory, and the design of software programs that harness the power of this hardware to process data.

Why is it called computer science?

Computer Science is the study of computers and computational systems. Unlike electrical and computer engineers, computer scientists deal mostly with software and software systems; this includes their theory, design, development, and application.

Is computer science hard?

Earning a computer science degree has been known to entail a more intense workload than you might experience with other majors because there are many foundational concepts about computer software, hardware, and theory to learn. Part of that learning may involve a lot of practice, typically completed on your own time.

Which computer degree is best?

7 popular computer degrees for IT jobs:
- Information Technology and Information Systems
- Computer Science
- Information Science
- Systems & Network Administration
- Software Engineering
- Computer Engineering
- Cybersecurity

How long is computer science course?

Typically three or four years. Computer Science can be studied for three years (BA) or four years (Master of Computer Science). The fourth year allows the study of advanced topics and an in-depth research project.

When was computer science first introduced?

The world's first computer science degree program, the Cambridge Diploma in Computer Science, began at the University of Cambridge Computer Laboratory in 1953. The first computer science department in the United States was formed at Purdue University in 1962.

What is computer science?

Computer science is the study of algorithmic processes, computational machines and computation itself. As a discipline, computer science spans a range ...

What can be automated?

According to Peter Denning, the fundamental question underlying computer science is, "What can be automated?" Theory of computation is focused on answering fundamental questions about what can be computed and what amount of resources are required to perform those computations. In an effort to answer the first question, computability theory examines which computational problems are solvable on various theoretical models of computation. The second question is addressed by computational complexity theory, which studies the time and space costs associated with different approaches to solving a multitude of computational problems.
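To make the second question concrete, the sketch below counts the steps two search strategies take on the same sorted list. The function names and the notion of a "step" (examining one element of the list) are assumptions for illustration, not a formal cost model: a linear scan needs up to n steps, while binary search needs at most about log2(n) + 1.

```python
# Illustrative step counters (hypothetical helpers): one "step" means
# examining one element of the list.

def linear_search_steps(sorted_items: list[int], target: int) -> int:
    """Scan items left to right; cost grows linearly with the list length."""
    steps = 0
    for item in sorted_items:
        steps += 1
        if item == target:
            break
    return steps


def binary_search_steps(sorted_items: list[int], target: int) -> int:
    """Halve the search interval on every step; cost grows logarithmically."""
    lo, hi, steps = 0, len(sorted_items) - 1, 0
    while lo <= hi:
        steps += 1
        mid = (lo + hi) // 2
        if sorted_items[mid] == target:
            break
        if sorted_items[mid] < target:
            lo = mid + 1
        else:
            hi = mid - 1
    return steps


if __name__ == "__main__":
    items = list(range(1_000_000))
    print(linear_search_steps(items, 999_999))  # 1000000
    print(binary_search_steps(items, 999_999))  # at most 20
```

The exact counts depend on the target, but the gap between roughly n and roughly log2(n) steps is the kind of difference complexity theory formalizes with notation such as O(n) and O(log n).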

Why do universities use the term "datalogy"?

Danish scientist Peter Naur suggested the term datalogy, to reflect the fact that the scientific discipline revolves around data and data treatment, while not necessarily involving computers.

What is the purpose of computer security?

Computer security is a branch of computer technology with the objective of protecting information from unauthorized access, disruption, or modification while maintaining the accessibility and usability of the system for its intended users. Cryptography is the practice and study of hiding (encryption) and recovering (decryption) information. Modern cryptography is closely tied to computer science, because the security of many encryption and decryption algorithms rests on the computational complexity of the problems an attacker would have to solve.
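As a toy illustration of security resting on computational difficulty, the sketch below uses textbook RSA with tiny primes. RSA is a swapped-in example (the text does not name a specific algorithm), and the primes 61 and 53 and the exponent 17 are illustrative assumptions. With numbers this small, anyone can recover the private key by factoring n, which is exactly the step believed to be infeasible when n has hundreds of digits. This toy is not secure and omits the padding real systems require.

```python
# Textbook RSA with deliberately tiny, insecure parameters (illustration only).
P, Q = 61, 53                 # hypothetical secret primes
N = P * Q                     # public modulus: 3233
PHI = (P - 1) * (Q - 1)       # 3120, easy to compute only if you know P and Q
E = 17                        # public exponent, coprime with PHI
D = pow(E, -1, PHI)           # private exponent via modular inverse (Python 3.8+)


def encrypt(m: int) -> int:
    """Anyone holding the public key (N, E) can encrypt a message 0 <= m < N."""
    return pow(m, E, N)


def decrypt(c: int) -> int:
    """Only the holder of the private exponent D can easily decrypt."""
    return pow(c, D, N)


def factor(n: int) -> tuple[int, int]:
    """Brute-force trial division: instant for 3233, hopeless for very large n."""
    f = 2
    while n % f != 0:
        f += 1
    return f, n // f


if __name__ == "__main__":
    c = encrypt(65)
    print(c, decrypt(c))      # 2790 65
    print(factor(N))          # (53, 61)
```

Knowing the factors 53 and 61 lets an attacker recompute PHI and then D, so the scheme is only as strong as the difficulty of factoring N.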

What is programming language theory?

Programming language theory is a branch of computer science that deals with the design, implementation, analysis, characterization, and classification of programming languages and their individual features.

What is the main article in computer science?

A number of computer scientists have argued for the distinction of three separate paradigms in computer science. Peter Wegner argued that those paradigms are science, technology, and mathematics.

Who invented the computer?

Computing took another leap in 1843, when English mathematician Ada Lovelace wrote the first computer algorithm, in collaboration with Charles Babbage, who devised a theory of the first programmable computer.

Who created the world wide web?

On the connectivity side, Tim Berners-Lee created the World Wide Web, and Marc Andreessen built a browser, and that’s how we came to live in a world where our glasses can tell us what we’re looking at.

Who invented the transistor?

But the modern computing-machine era began with Alan Turing's conception of the Turing Machine and with three Bell Labs scientists' invention of the transistor, which made modern-style computing possible and earned them the 1956 Nobel Prize in Physics.

What was the first computer science program?

The first computer science departments in American colleges came along in the early 1960s, starting at Purdue University.

Who is the founder of computer science?

One of them, IBM Chief Executive Thomas J. Watson Sr., the son of a farmer and lumber dealer who grew up near remote Painted Post, NY, only graduated from high school.

Why did IBM start the Manhattan Systems Research Institute?

Because there were so few university courses in computing in the early days, IBM set up the Manhattan Systems Research Institute in 1960 to train its own employees. It was the first program of its kind in the computer industry.

Why did IBM run executive training classes?

In the mid-1930s, General Electric suggested that IBM run customer executive training classes to explain what IBM products could do for clients. In response, IBM created a tabulating knowledge-sharing program.

When did IBM start teaching Watson?

Watson Laboratory courses are listed in a 1951 Columbia University course catalog. In 1947, IBM began teaching the Watson Laboratory Three-Week Course on Computing, which is seen as the first hands-on computer course. It reached more than 1600 people. The course was dispersed to IBM education centers worldwide in 1957. By 1973, IBM had about 100 educational centers, and in 1984, it was conducting 1.5 million student-days of teaching.

How many people did the IBM course reach?

The Watson Laboratory Three-Week Course on Computing reached more than 1600 people. The course was dispersed to IBM education centers worldwide in 1957. By 1973, IBM had about 100 educational centers, and in 1984, it was conducting 1.5 million student-days of teaching.

When did IBM start the Watson Laboratory?

At the time, few scientists of any discipline understood how to use computers. So in 1947, IBM created the “Watson Laboratory Three-Week Course on Computing” and, over time, thousands of academics and high school science and math teachers took the course.

Milestones in the history of computer science

In the 1840s, Ada Lovelace became known as the first computer programmer when she described an operational sequence for machine-based problem solving. Since then, computer technology has taken off.

1890: Herman Hollerith designs a punch card system to calculate the US Census

The US Census had to collect records from millions of Americans. To manage all that data, Herman Hollerith developed a new system to crunch the numbers. His punch card system was an early predecessor of the punched cards that later computers used to read data and programs. Hollerith used electricity to tabulate the census numbers.

1936: Alan Turing develops the Turing Machine

Mathematician Alan Turing came up with a new, theoretical device in 1936: the Turing Machine. The abstract machine, which Turing called an "automatic machine," computed numbers by reading and writing symbols on a tape according to a fixed table of rules. In doing so, Turing helped found computer science, and in particular the field of theoretical computer science.
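A Turing machine can be simulated in a few lines. In the sketch below, the rule table is a hypothetical example (not one of Turing's own machines) that adds 1 to a binary number written on the tape; the state names, the blank symbol `_`, and the step limit are assumptions made for illustration.

```python
from collections import defaultdict

# Hypothetical rule table: (state, symbol read) -> (symbol to write, head move, next state)
# Moves: +1 = right, -1 = left, 0 = stay. This machine increments a binary number.
INCREMENT_RULES = {
    ("right", "0"): ("0", +1, "right"),   # scan right to the end of the number
    ("right", "1"): ("1", +1, "right"),
    ("right", "_"): ("_", -1, "carry"),   # hit the blank; step back onto the last digit
    ("carry", "1"): ("0", -1, "carry"),   # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", 0, "halt"),     # 0 + carry = 1, done
    ("carry", "_"): ("1", 0, "halt"),     # ran off the left edge: write a new leading 1
}


def run_turing_machine(tape_input: str, rules=INCREMENT_RULES, start="right",
                       blank="_", max_steps=10_000) -> str:
    """Run the machine from `start` until it reaches the 'halt' state."""
    tape = defaultdict(lambda: blank, enumerate(tape_input))  # unbounded tape
    head, state, steps = 0, start, 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        write, move, state = rules[(state, tape[head])]
        tape[head] = write
        head += move
        steps += 1
    # Read back the visited portion of the tape, ignoring surrounding blanks.
    return "".join(tape[i] for i in range(min(tape), max(tape) + 1)).strip(blank)


if __name__ == "__main__":
    print(run_turing_machine("1011"))  # 1100
```

The `max_steps` guard is a practical choice: by the halting problem, no general procedure can decide in advance whether an arbitrary machine will halt.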

1939: Hewlett-Packard is founded

Hewlett-Packard had humble beginnings in 1939, when friends David Packard and William Hewlett decided the order of their names in the company brand with a coin toss. The company originally created an audio oscillator that Disney used for Fantasia. Later, it turned into a printing and computing powerhouse.

1941: Konrad Zuse assembles the Z3 electromechanical computer

World War II represented a major leap forward for computing technology. Around the world, countries invested money in developing computing machines. In Germany, Konrad Zuse created the Z3, an electromechanical computer built from relays. It is widely regarded as the first working programmable, fully automatic computing machine. The Z3 could store 64 numbers in its memory.

1943: John Mauchly and J. Presper Eckert build the Electronic Numerical Integrator and Computer (ENIAC)

The ENIAC computer was the size of a large room, and programmers had to connect wires manually to set up calculations. The ENIAC boasted 18,000 vacuum tubes and 6,000 switches. The US hoped to use the machine to calculate artillery trajectories during the war, but the 30-ton machine was so large that it was not completed until 1945.

1947: Bell Telephone Laboratories invents the transistor

Transistors amplify and switch electronic signals, and they came out of Bell Telephone Laboratories in 1947. Three physicists developed the new technology: William Shockley, John Bardeen, and Walter Brattain. The men received the 1956 Nobel Prize in Physics for their invention, which changed the course of the electronics industry.

Who invented the computer?

1800s. Charles Babbage, the father of computing, pioneered the concept of a programmable computer. Babbage designed the first mechanical computer. Its architecture resembled that of the modern computer; he called his mechanical computer the Analytical Engine.

Who was the first computer programmer?

As designed, the Analytical Engine was to be programmed with punch cards, and it used the decimal number system. Ada Lovelace worked with Charles Babbage on the Analytical Engine. She is regarded as the first computer programmer: in her notes on the engine, she wrote the first algorithm intended to be carried out by a machine.

What are the three theories of computation?

Ramon Llull is considered a pioneer of computation theory. He developed a new system of logic in his work Ars magna, which influenced the development of several ideas within mathematics, specifically:
1. The idea of a formal language
2. The idea of a logical rule
3. The computation of combinations
4. The use of binary and ternary relations
5. The use of symbols for variables
6. The idea of substitution for a variable
7. The use of a machine for logic

Which logic system did Boole use?

Most logic within electronics now stands on top of George Boole's work. Boole also used the binary system in his algebraic system of logic, and this work inspired Claude Shannon to use the binary system in his own work. (Fun fact: the Boolean data type is named after Boole!)

Who is the founder of the modern computer?

Then came a man most computer scientists should know about: George Boole. He was the pioneer of what we call Boolean logic, which is the basis of the modern digital computer. Boolean logic is also the basis for digital logic and digital electronics.
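To see why Boolean logic underlies digital computers, the sketch below builds integer addition out of nothing but the Boolean operations AND, OR, and XOR, the way a ripple-carry adder does in hardware. The function names and the default 8-bit width are illustrative assumptions.

```python
def full_adder(a: bool, b: bool, carry_in: bool) -> tuple[bool, bool]:
    """Add three one-bit values using only Boolean operations."""
    partial = a ^ b                                   # XOR
    sum_bit = partial ^ carry_in                      # XOR
    carry_out = (a and b) or (carry_in and partial)   # AND / OR
    return sum_bit, carry_out


def ripple_add(x: int, y: int, width: int = 8) -> int:
    """Add two non-negative integers bit by bit, as chained full adders would.

    Any carry out of the top bit is discarded, so the result wraps modulo 2**width.
    """
    result, carry = 0, False
    for i in range(width):
        a = bool((x >> i) & 1)
        b = bool((y >> i) & 1)
        sum_bit, carry = full_adder(a, b, carry)
        result |= int(sum_bit) << i
    return result


if __name__ == "__main__":
    print(ripple_add(5, 3))      # 8
    print(ripple_add(200, 100))  # 44, because 300 wraps around in 8 bits
```

Each call to `full_adder` corresponds to a small circuit of logic gates; chaining `width` of them gives a fixed-width adder, which is why overflow wraps around.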

What was Euclid's philosophy in 300 BC?

Around 300 BC, Euclid wrote a series of 13 books called the Elements. The definitions, theorems, and proofs covered in the books became a model for formal reasoning. The Elements was instrumental in the development of logic and modern science.

What was the job of computers in the 1920s?

Computers in those days were people whose job it was to calculate various equations. Many thousands were employed by the government and businesses.

Who invented the algorithm?

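Etymologically, the word "algorithm" derives from the Latinized name of the ninth-century Persian mathematician al-Khwarizmi, whose treatise on al-jabr also gave us the word "algebra". Earlier, in the seventh century, the Indian mathematician Brahmagupta gave rules for arithmetic with zero within the decimal place-value system.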

What is the name of the computer used in the Korean War?

Analog computing devices include the Polish analog computer ELWAT, the typical student slide rule, and the Norden bombsight. The Norden bombsight was used by the US military during World War II, the Korean War, and the Vietnam War; its uses included the dropping of the atomic bombs on Japan.

Who invented the analytical engine?

The Analytical Engine is a key step in the formation of the modern computer. It was designed by Charles Babbage starting in 1837, and he continued to work on it until his death in 1871. Because of legal, political, and monetary issues, the machine was never built.

Is the home computer revolution still happening?

Three and a half decades later, the home computer revolution is still occurring. Microsoft still dominates the market, but Apple has gained some ground with the introduction of the new iMac and other innovative products such as the iPod, as have independent open-source operating systems such as Linux and Solaris.

Where did the word "calculate" come from?

The word "calculate" is said to be derived from the Latin word "calcis", meaning limestone, because limestone was used in the first sand table. The form the pebble container should take for handy calculations kept many of the best minds of the Stone Age busy for centuries.

Who invented logarithms?

The development of logarithms by the Scottish mathematician John Napier (1550–1617) in 1614 stimulated the invention of various devices that substituted the addition of logarithms for multiplication. Napier played a key role in the history of computing.
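The principle behind these devices is the identity log(xy) = log(x) + log(y): look up two logarithms, add them, and convert the sum back. The sketch below shows this with base-10 logarithms; the function names and the four-decimal-place rounding (standing in for a printed log table) are illustrative assumptions.

```python
import math


def multiply_via_logs(x: float, y: float) -> float:
    """Multiply two positive numbers by adding their base-10 logarithms."""
    return 10 ** (math.log10(x) + math.log10(y))


def multiply_with_table(x: float, y: float, places: int = 4) -> float:
    """Same idea, but with logarithms rounded as if read from a printed table."""
    log_x = round(math.log10(x), places)
    log_y = round(math.log10(y), places)
    return 10 ** (log_x + log_y)  # the antilogarithm step


if __name__ == "__main__":
    print(multiply_via_logs(123, 456))    # very close to 56088
    print(multiply_with_table(123, 456))  # close to 56088, within table precision
```

A slide rule applies the same identity mechanically: its scales are spaced logarithmically, so sliding one length along another adds logarithms.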

When did the shift key typewriter come out?

The first shift-key typewriter (in which uppercase and lowercase letters are made available on the same key) didn't appear on the market until 1878, and it was quickly challenged by another flavor which contained twice the number of keys, one for every uppercase and lowercase character.

History

- The earliest foundations of what would become computer science predate the invention of the modern digital computer. Machines for calculating fixed numerical tasks such as the abacus have existed since antiquity, aiding in computations such as multiplication and division. Algorithms for performing computations have existed since antiquity, even befo...

Etymology

- Although first proposed in 1956, the term "computer science" appears in a 1959 article in Communications of the ACM, in which Louis Fein argues for the creation of a Graduate School in Computer Sciences analogous to the creation of Harvard Business School in 1921, justifying the name by arguing that, like management science, the subject is applied and interdisciplinary in na…

Philosophy

- Epistemology of computer science

Despite the word "science" in its name, there is debate over whether or not computer science is a discipline of science, mathematics, or engineering. Allen Newell and Herbert A. Simon argued in 1975, … It has since been argued that computer science can be classified as an empirical science …

- Paradigms of computer science

A number of computer scientists have argued for the distinction of three separate paradigms in computer science. Peter Wegner argued that those paradigms are science, technology, and mathematics. Peter Denning's working group argued that they are theory, abstraction (modeling)…

Fields

- As a discipline, computer science spans a range of topics from theoretical studies of algorithms and the limits of computation to the practical issues of implementing computing systems in hardware and software. CSAB, formerly called Computing Sciences Accreditation Board, which is made up of representatives of the Association for Computing Machinery (ACM), and the IEEE Co…

Discoveries

Programming Paradigms

- Programming languages can be used to accomplish different tasks in different ways. Common programming paradigms include: 1. Functional programming, a style of building the structure and elements of computer programs that treats computation as the evaluation of mathematical functions and avoids state and mutable data. It is a declarative programming paradigm, which …
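The contrast between functional and imperative styles can be sketched in Python, which supports both styles but is not a purely functional language (a language such as Haskell enforces the style more strictly). Both hypothetical functions below compute the sum of squares of a list: the imperative version updates a variable inside a loop, while the functional version composes `map` and `functools.reduce` without reassigning anything.

```python
from functools import reduce


def sum_of_squares_imperative(numbers: list[int]) -> int:
    """Imperative style: a loop repeatedly mutates the local variable `total`."""
    total = 0
    for n in numbers:
        total += n * n
    return total


def sum_of_squares_functional(numbers: list[int]) -> int:
    """Functional style: the result is a single expression built from function
    applications, with no reassignment and no mutation of `numbers`."""
    return reduce(lambda acc, sq: acc + sq, map(lambda n: n * n, numbers), 0)


if __name__ == "__main__":
    data = [1, 2, 3, 4]
    print(sum_of_squares_imperative(data))  # 30
    print(sum_of_squares_functional(data))  # 30
```

The functional version is declarative in the sense the text describes: it states what the result is (the sum of the squared values) rather than a sequence of state changes that produce it.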

Academia

- Conferences are important events for computer science research. During these conferences, researchers from the public and private sectors present their recent work and meet. Unlike in most other academic fields, in computer science, the prestige of conference papers is greater than that of journal publications. One proposed explanation for this is the quick development of t…

Education

- Computer Science, known by its near synonyms, Computing, Computer Studies, has been taught in UK schools since the days of batch processing, mark sensitive cards and paper tape but usually to a select few students. In 1981, the BBC produced a micro-computer and classroom network and Computer Studies became common for GCE O level students (11–16-year-old), and Comput…
